DEX Guide

Main Navigation

DEX Users Guide

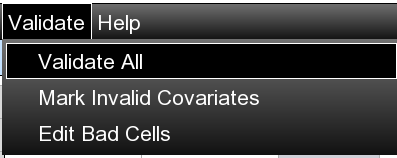

Validate Menu Commands

The VALIDATE menu provides tools to check the quality and consistency of your data. These validation functions help ensure your dataset meets required standards before analysis.

-

DATA RULES: Apply predefined validation rules to your dataset. Rules can check for valid ranges, required fields, formatting standards, and more.

-

IDENTIFY OUTLIERS: Automatically detect statistical outliers in numerical columns using various detection methods including Z-score, IQR (Interquartile Range), and other algorithms.

-

FIND DUPLICATES: Identify and highlight duplicate records in your dataset based on selected columns or entire rows.

-

CHECK MISSING VALUES: Scan your dataset for missing values and generate a comprehensive report on data completeness.

-

VALIDATE DATES: Verify that date columns contain valid, well-formatted dates within expected ranges.

Working with Data Rules

Creating Data Validation Rules

Rule Builder

DEX provides a visual rule builder that allows you to create validation rules without writing code:

- Select DATA RULES from the VALIDATE menu

- Click New Rule to open the rule builder dialog

- Select columns to validate and set appropriate conditions

- Save your rule with a descriptive name

Rules can be saved and reused across multiple datasets.

Advanced Rule Definition

For complex validation needs, DEX supports formula-based rules:

// Example rule expressions

[Age] >= 18 AND [Age] <= 65

[Price] > 0 AND [Stock] >= 0

ISDATE([BirthDate]) AND [BirthDate] <= TODAY()

LEN([ZipCode]) = 5 OR LEN([ZipCode]) = 10

[Email] MATCHES '^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$'

These rules can be combined to create comprehensive validation checks.

Applying Validation Rules

Validation Process

After creating rules, you can validate your data in three ways:

- Interactive Validation: Validates data as you enter it, highlighting issues in real-time

- Batch Validation: Processes all rules against the entire dataset at once

- Scheduled Validation: For automated workflows, validates data on a set schedule

Validation results are displayed in a detailed report showing:

- Number of records passing/failing validation

- Specific cells with validation errors

- Suggested fixes for common issues

Finding Duplicates and Outliers

Duplicate Detection

DEX offers several methods for finding duplicate records:

- Exact Match: Finds identical records across all columns

- Column-Based: Identifies duplicates based on selected key columns

- Fuzzy Match: Detects near-duplicates using similarity algorithms

After detection, duplicates can be:

- Highlighted for manual review

- Marked with a status flag

- Automatically removed (with confirmation)

- Exported to a separate dataset

Outlier Detection

Outliers can distort analysis results. DEX provides multiple detection methods:

- Z-Score: Identifies values beyond standard deviation thresholds

- IQR (Interquartile Range): Detects values outside Q1-1.5*IQR and Q3+1.5*IQR

- Percentile: Flags values beyond specified percentile ranges

- Modified Z-Score: Robust method less affected by extreme values

Detected outliers can be:

- Highlighted in the data view

- Filtered for closer examination

- Automatically capped at threshold values

- Visualized in special outlier charts

Handling Missing Values

Missing data can significantly impact analysis. DEX helps identify and address missing values through a systematic approach.

| Step | Action | Description |

|---|---|---|

| 1 | Detect | Scan the entire dataset for NULL values, empty strings, or custom-defined "missing" values (e.g., "N/A", "-999") |

| 2 | Analyze | Generate a missing value report showing patterns and statistics (% missing by column, groups, etc.) |

| 3 | Visualize | Create missing value heat maps to visualize patterns of missingness across the dataset |

| 4 | Address | Apply various strategies to handle missing values based on analysis results |

Missing Value Handling Options

- Remove rows with missing values

- Fill with constants (0, -1, etc.)

- Replace with mean/median/mode

- Use interpolation (linear, spline)

- Apply predictive models to estimate values

- Forward/backward fill for time series

- Flag missing with indicator variables

- Group-based imputation by category

- Create special category for missingness

Validation Best Practices

Validation Workflow

- Always validate before important analysis

- Create a standard validation template for frequently used datasets

- Document validation rules and their business justifications

- Address critical data quality issues before moving to analysis

- Save validation reports for audit and traceability

Common Pitfalls

- Treating all outliers as errors without investigation

- Removing too many incomplete records, creating selection bias

- Applying inappropriate imputation methods for missing values

- Creating overly strict validation rules that flag valid business exceptions

- Failing to communicate data quality issues to stakeholders